"If Anyone Builds It, Everyone Dies"

Erich Maria Remarque called life an illness cured only by death. Søren Kierkegaard described despair as the sickness unto death. Now we outsource not only our fear of dying but our demise itself, to AI.

Cesare di Monte Calvi

AI Version of Climate Hysteria: Good for Business

A new apocalyptic, self-serving, and ideologically loaded book has arrived, published on September 16, 2025, and penned by Eliezer Yudkowsky and Nate Soares. I dabble in AI and I do see dangers, but they are altogether different from the authors’ apocalyptic scenario.

TL;DR:

Doom talk about killer AI isn’t science; it’s eschatology.

The authors are prophets of a secular apocalypse.

The book reads like fearmongering masquerading as foresight.

HAL 9000 at least had a “mission to protect.” If humans had to die, it was in service of some twisted logic.

That’s nothing new. The recent “pandemic” sacrificed not only our freedoms but children’s health, happiness, and, ultimately, lives. That morphed into ritualized slaughter in Gaza. And it drags on in Ukraine: four years of blood for Mammon, sanctified by merciless, ubiquitous propaganda.

Behind all these tragedies stand false prophets like Greta, whose ventriloquists handed her a tired script to endlessly regurgitate. Or that Gore character, who made a pretty penny out of it, along with thousands of snake-oil salesmen. We’re not dead yet, but millions of kids listening to their “activist” idol and her Madison Avenue–polished “message” live in dread of cows farting. Lunatics have taken over the asylum, and fear, like porn and gore, sells.

Just think about it for a moment. Back in 2013, Barack Obama wrote on Twitter: “Sea levels are rising due to #climate change, potentially threatening U.S. cities: http://OFA.BO/sacMyb We have to #ActOnClimate.” Who would not trust him? A false prophet of hope, a living monument to the delusional collective madness his appearance foretold, he was rewarded with a Nobel Peace Prize not for what he had done but for what he might do (and never did), and went on to wage seven wars. My pet peeve, an undeserved Nobel, aside, he “acted on climate” and is now bravely standing on the front line. Here, there, anywhere.

As of late 2025, the charming Obama couple is fighting for our freedoms and every inch of our shores: they fight the climate on the beaches, they fight it on the landing grounds, they fight it in the fields and in the streets, they fight it in the hills... but mostly on the beaches. Barack and Michelle Obama now own oceanfront properties in Martha’s Vineyard, Massachusetts, and Waimanalo, Oahu, Hawaii. They shall never surrender! Like the generals of an old era, or the ship captains of legend, they will go headfirst into the rising tide and die if necessary, so that we, left behind in squalor, may survive.

They represent that nauseating cabal of hypocrites: a tiny sliver of psychopaths clinging to power while their coffers swell. These are people who were “serving the nation” yet became richer than the thieving kings of yesteryear. So when we’re handed another global threat (“AI that will kill us all,” as they say), one should, as always, follow the money.

Cui bono?

Where did the climate change billions go?

The COVID “vaccine” subsidies?

The sales of weapons?

At least the shameless, murderous global COVID loot was sold as a panacea, “saving us” from the virus with muzzles stitched in filthy basements somewhere in India. But now, untold billions are being poured into funding our own supposed demise. And no one thinks that’s just a little bit wrong?

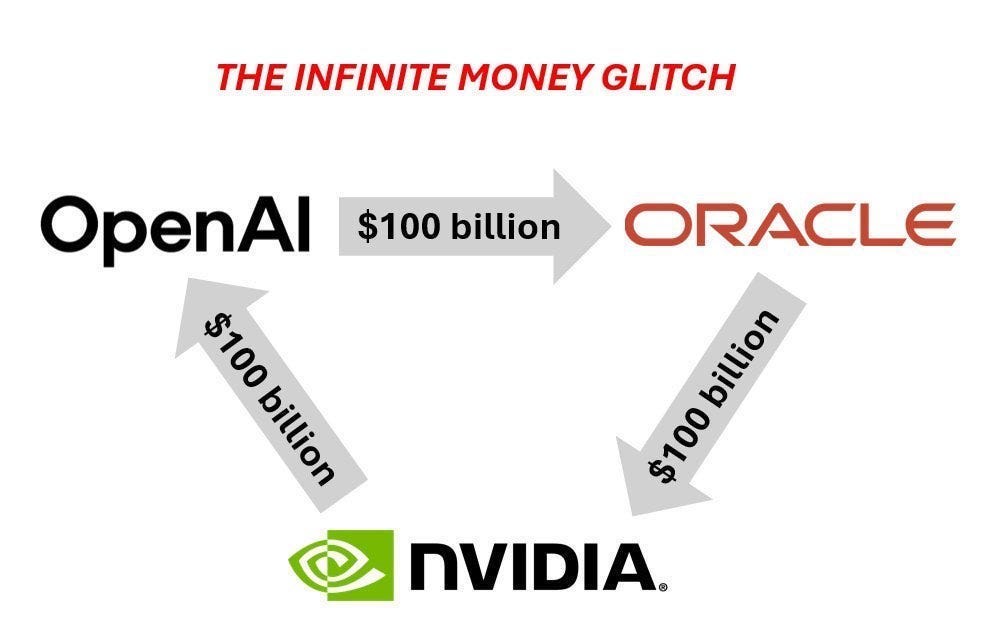

“Oracle says OpenAi committed $300B for cloud compute → Oracle stock jumps 36% (best day since 1992) Oracle runs on Nvidia GPUs → has to buy billions in chips from Nvidia. Nvidia just announced they’re investing $100B into OpenAi OpenAI uses that money to... pay Oracle... who pays Nvidia... who invests in OpenAi.” - Circular vendor financing of Nvidia to boost sales and meet hyperscaler capacity, 2025. ~ Michael Spencer. (link)

So, in this hyper-financialized world, where money rules, lives are cheap, and every lie comes shrink-wrapped in a veneer of “science” and painted over with a coat of fake “journalism,” I grew suspicious. But I’m just a writer turned entrepreneur. What do I know?

The real experts, the ones who wrote If Anyone Builds It, Everyone Dies: Why Superhuman AI Would Kill Us All, understand the danger better than I ever could, so I purchased and, alas, read the book. (This is an affiliate link. Yes, I jumped on the gravy train too. Should you buy their book through my link, I will earn $0.76.)

A who’s who of experts from the world of nobility endorsed the book, from climate change warriors like Ben Bernanke (ex-Fed Chairman) to Mark Ruffalo (ex-Hulk) and even Grimes (no clue who that is, but it must be important if that name is on the cover, and I love the name), so I dreaded saying anything against it. Eliezer and Nate aren’t very good writers; they will not snatch the Nobel Prize in Literature from real writers. But who am I to judge? Their book is also an instant New York Times bestseller: a very suspicious metric from that propaganda rag, but still an achievement none of my books will ever match, not even in my dreams. Maybe I’m just jealous.

I am human, after all, and nothing human is strange to me. It’s safer to stay quiet, absorb the wisdom, nod along—even if I disagree with this or that. After all, as Socrates said, “I know that I know nothing.” That’s the right mindset when confronting higher knowledge. Especially from central bankers and Hollywood Avengers.

Where the Logic Collapses

But then I read this nonsense:

If you hoped for AIs to behave less like exploding nuclear reactors, you might try to put constraints onto the system:

“Don’t get too smart yet.”

“Don’t think too fast yet.”

“Always wait for slow human approval.”

“Solve this difficult problem, but without doing anything weird.”

This reads like a bad-faith parody of alignment research. The authors set up a false analogy:

An AI is a statistical model trained on language tokens.

A runaway nuclear reaction cannot be controlled. Humans control the AI.

A nuclear reactor is a physical system governed by deterministic physics.

Framing the problem as “exploding nuclear reactors” is cheap propaganda: it builds emotional loading and primes the reader for fear. The authors devote pages and pages to Chernobyl. Why, in a book about AI, if not for cheap thrills? No AIs were at Chernobyl. And unlike a reactor, AI doesn’t have a single failure mode (e.g., meltdown). Its risk surface is emergent, multi-layered, and dependent on the humans and applications involved.

Now, even if I give the authors the benefit of the doubt and assume they believed their readers were idiots and simplified the message accordingly, this is still so wrong that I couldn’t stay silent. I’ve been writing “constraints onto the system” for months, and I have some idea what that means. After all, the AI taught me how to teach it.

But I do not trust them.

In computing, there’s something called a “zero trust” architecture—a model that assumes no system, user, or process is ever inherently trustworthy.

So I had our developers build an AuditSystem to check the AI, so that we could trust our own constraints at least somewhat (we still suspect them and test them vigorously).

In the book, these four lines read like a set of lame jokes.

Constraint 1:

“Don’t get too smart yet.”

This is a bad-faith caricature of safety throttling, and deliberately vague.

What real engineers mean by this:

Don’t release models with emergent dangerous capabilities without full eval.

Restrict self-improvement or recursive fine-tuning without oversight.

Cap abstraction depth or allow only bounded inference windows.

Yudkowsky and Soares mock this because they don’t believe AI intelligence can be controlled at all. The line functions rhetorically to preemptively discredit all cautious scaling strategies by framing them as naive. And you do not tell an AI in vague terms what NOT to do; you tell it what it MUST do, and if something is forbidden, the prohibition must be 100% clear.
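To make “tell it what it MUST do” concrete, here is a minimal Python sketch, assuming agent actions arrive as plain string names. It is not our AuditSystem and not any vendor’s API; `ActionPolicy` and all action names are hypothetical. The point is the shape: an explicit allowlist, explicitly named prohibitions, and a default deny for everything else.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class ActionPolicy:
    """Hypothetical action policy: an explicit allowlist (what the model MAY do)
    plus explicitly named prohibitions. Anything unnamed is denied by default."""

    allowed: frozenset
    forbidden: frozenset = field(default_factory=frozenset)

    def check(self, action: str) -> tuple:
        # Named prohibitions are checked first, so they win even if an action
        # were mistakenly put on both lists.
        if action in self.forbidden:
            return False, f"'{action}' is explicitly forbidden"
        # Default deny: no vague "don't be too smart", just "not on the list".
        if action not in self.allowed:
            return False, f"'{action}' is not on the allowlist"
        return True, "ok"


# Hypothetical action names, for illustration only.
policy = ActionPolicy(
    allowed=frozenset({"summarize_document", "draft_reply"}),
    forbidden=frozenset({"send_email", "execute_shell"}),
)

for action in ["draft_reply", "send_email", "book_flight"]:
    print(action, policy.check(action))
```

Anything nobody thought to name (`book_flight` above) is rejected rather than left to the model’s judgment, which is the opposite of a vague “don’t get too smart.”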

Constraint 2:

“Don’t think too fast yet.”

The framing is also nonsense: in real systems, latency throttling, delay loops, and deliberation gates are valid design tools, and speed is a parameter tuned per domain. In high-frequency trading, slowness loses money; in drone warfare, slowness kills the operator. A “constraint” like this without parameters is pure idiocy.
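A deliberation gate only means something once it has numbers attached. Here is a minimal Python sketch, assuming a sliding-window limit plus a minimum gap between actions; `DeliberationGate` and its parameters are hypothetical names, and the values in the demo are arbitrary. The clock is injectable so the behavior can be checked without actually sleeping.

```python
import time
from collections import deque
from typing import Callable


class DeliberationGate:
    """Hypothetical gate: at most `max_actions` per `window_s` seconds,
    and at least `min_interval_s` seconds between consecutive actions."""

    def __init__(self, max_actions: int, window_s: float, min_interval_s: float,
                 clock: Callable[[], float] = time.monotonic):
        self.max_actions = max_actions
        self.window_s = window_s
        self.min_interval_s = min_interval_s
        self.clock = clock      # injectable so tests need not sleep
        self.history = deque()  # timestamps of recently permitted actions

    def try_acquire(self) -> bool:
        now = self.clock()
        # Forget actions that have slid out of the window.
        while self.history and now - self.history[0] >= self.window_s:
            self.history.popleft()
        if self.history and now - self.history[-1] < self.min_interval_s:
            return False  # too soon after the previous action: wait
        if len(self.history) >= self.max_actions:
            return False  # budget for this window is spent
        self.history.append(now)
        return True


# Demo with a fake clock: 2 actions per 10 s, at least 1 s apart.
fake_now = [0.0]
gate = DeliberationGate(max_actions=2, window_s=10.0, min_interval_s=1.0,
                        clock=lambda: fake_now[0])
results = []
for t in [0.0, 0.5, 1.5, 3.0, 11.0]:
    fake_now[0] = t
    results.append(gate.try_acquire())
print(results)
```

In a trading system you would set `min_interval_s` near zero; in a system that touches the physical world you might set it much higher. That per-domain tuning is exactly what a parameterless “don’t think too fast” leaves out.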

Constraint 3:

“Always wait for slow human approval.”

Again, this is framed as laughable. But it is literally the core principle of human-in-the-loop design, a standard requirement in certified safety-critical systems. Think: aircraft software, FDA-approved devices, military fire control.
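Human-in-the-loop can be as simple as a gate that refuses to act on anything above a risk threshold until a person says yes. A minimal Python sketch; the 0 to 10 risk score, the threshold of 3, and the `reviewer` function (standing in for a real review queue) are all assumptions for illustration, and nothing is actually executed here.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ProposedAction:
    name: str
    risk: int  # hypothetical 0-10 score assigned by upstream checks


def execute_with_approval(action: ProposedAction,
                          approve: Callable[[ProposedAction], bool],
                          risk_threshold: int = 3) -> str:
    """Low-risk actions pass straight through; anything else needs a human 'yes'.
    Returns a description of the decision (this sketch executes nothing)."""
    if action.risk < risk_threshold:
        return f"executed {action.name} (below threshold)"
    if approve(action):
        return f"executed {action.name} (human approved)"
    return f"blocked {action.name} (no human approval)"


def reviewer(action: ProposedAction) -> bool:
    # Stand-in for a real review queue: this human approves refunds only.
    return action.name == "refund"


for proposed in [ProposedAction("lookup_order", 1),
                 ProposedAction("refund", 6),
                 ProposedAction("delete_account", 9)]:
    print(execute_with_approval(proposed, reviewer))
```

Note the default: if the human does not approve, the action is blocked, not retried or reworded.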

Constraint 4:

“Solve this difficult problem, but without doing anything weird.”

This is their mockery, a sneer: the ultimate, albeit cheap, absurdity.

But again, in engineering, “don’t be weird” means:

Stay within known boundaries.

Avoid emergent edge-case behavior.

Do not invent your own solution path without visibility.

It’s the core of interpretability research and model monitoring. In cybersecurity, you’d call this unexpected behavior detection. In robotics, you’d call this out-of-distribution action suppression. In autonomous vehicles, you’d call this conformance checking.
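Out-of-distribution detection can be illustrated with something far cruder than real interpretability work: compare each new behavior profile against statistics from known-good runs and flag anything too far from the norm. This Python sketch swaps in a simple per-feature z-score test; the three features (tool calls per request, output length in hundreds of tokens, distinct domains contacted), the baseline numbers, and the cutoff of 3 standard deviations are all hypothetical.

```python
from statistics import mean, stdev


def fit_baseline(samples: list[list[float]]) -> list[tuple[float, float]]:
    """Per-feature (mean, standard deviation) from known-good behavior profiles."""
    columns = zip(*samples)  # transpose: one tuple per feature
    return [(mean(col), stdev(col)) for col in columns]


def is_out_of_distribution(x: list[float],
                           baseline: list[tuple[float, float]],
                           z_max: float = 3.0) -> bool:
    """Flag x if any feature lies more than z_max standard deviations from its mean."""
    for value, (mu, sigma) in zip(x, baseline):
        if sigma == 0:
            # A feature that never varied: any change at all is unexpected.
            if value != mu:
                return True
            continue
        if abs(value - mu) / sigma > z_max:
            return True
    return False


# Hypothetical features per request:
# [tool calls, output length in hundreds of tokens, distinct domains contacted]
normal_runs = [
    [2, 3.0, 1],
    [3, 2.5, 1],
    [2, 3.5, 2],
    [4, 3.0, 1],
    [3, 2.8, 1],
]
baseline = fit_baseline(normal_runs)

print(is_out_of_distribution([3, 3.1, 1], baseline))   # typical request
print(is_out_of_distribution([3, 3.0, 12], baseline))  # suddenly contacts 12 domains
```

Production monitors use richer signals, but the principle is the same: “weird” is defined numerically, relative to observed normal behavior, and it triggers a check rather than a shrug.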

They made it sound dumb on purpose. I guess they need to sell the book to millions who will read it and come away not an iota smarter than before. But thinking that everyone else is stupider than you and incapable of critical thinking is ugly.

Systemic Dishonesty

Now, focusing on only a few lines in the book to make my point can be just as ugly. I’m not smart enough to rebut all their claims, but I am smart enough to know my own ignorance and aware enough to smell someone else’s bullshit. And when someone declares, “If anyone builds it, everyone dies,” that’s not science; it’s crap. Cheap sensationalism.

The book lives on these premises:

We have no unified theory of intelligence.

We do not know what “superintelligence” is.

We have no idea who might build it, what it would be, or how.

And yet: “If anyone builds it, everyone dies.”

This is a logical fallacy wrapped in deliberate rhetorical shock: from premises of admitted ignorance, it leaps to a conclusion of total certainty. Call it benevolent alarmism at best, sheer dishonesty at worst, or just our common, natural human stupidity in action. I would gladly concede that Yudkowsky’s intelligence, admired and hated by many, is a form of superintelligence compared to my own dwindling IQ. Yet I have zero fear he’d go on a killing rampage against those like me, who are not as smart as he is. Likewise, I am probably superintelligent compared to a sad victim of fentanyl addiction, at least in their current state, but they are safe from any murderous rampage of mine.

When the book endlessly talks about the speed of technological advancement to make its point (that ASI is near, just as Ray Kurzweil’s Singularity is always nearer), it forgets basic facts:

No human can run faster than a car, and we live with it. When a car “kills,” it’s almost always a human behind the wheel. Our lives are improved and made easier, thanks to cars.

Not even Magnus Carlsen, likely the best chess player who ever lived, has any chance of beating a modern chess engine. We live with it, and our understanding of chess has grown by leaps and bounds. We are powerless patzers in front of the engine, but it hasn’t destroyed our passion for the game, nor does it want to kill us for being so painfully stupid in comparison.

No human biologist can reliably predict how a protein will fold from its amino acid sequence. AI can. Systems like AlphaFold have largely solved one of the grand challenges of biology, operating at a level of intelligence so far beyond any human scientist that it’s almost a different category of thought. This “superintelligence” (the authors use the same argument to make the opposite case) isn’t plotting to kill us; it’s accelerating drug discovery, helping us cure diseases, and actively working to save us (unless Pfizer et al. snatch it for their own profit).

Across modern history, certain breakthroughs, from bioweapons to computing and from nuclear power to genetic engineering, have at various points been framed as existential threats. Not all of them carried doom-laden reputations like SARS-CoV-2 or the Y2K bug (which wasn’t going to “kill us all” but was supposed to destroy the world’s economy).

Still, the rhetoric of “we’re all going to die” exerts a strange pull on us mere humans. After all, if the claim were “If superintelligence is built, 1.64% of humanity will die” (roughly 130 million people), it would provoke only a yawn, even with irrefutable proof.

“We’ve Heard That Song Before”

We’ve seen this playbook before: create a terrifying, intangible, global threat, amplify it through Goebbelsian mass-media propaganda and Grebama hydras, and then sell the “solution.” It’s a lucrative business model. The climate narrative built a multi-trillion-dollar industry. The pandemic response did the same. Now AI is the next frontier for this moral-panic-driven capital allocation.

But the financial grift is merely the vehicle. The true target is far more precious. Their goal isn’t to prevent our extinction, but to manage our existence. And the prerequisite for total management is the erosion of the individual. They sell an apocalypse to frighten us into forfeiting the one thing that makes us human: the self.

This is the true “sickness unto death,” the quiet catastrophe that happens not with a bang, but in silence. Søren Kierkegaard diagnosed it perfectly:

“A self is the last thing the world cares about and the most dangerous thing of all for a person to show signs of having... The greatest hazard of all, losing the self, can occur very quietly in the world, as if it were nothing at all.”

That is the real existential risk. They are softening our resistance, one blow at a time, like the bad cop who hits us and the worse cop who offers a cigarette, all in a film noir written for us, directed by them, paid for by us, and shot without our consent.

The Question They Never Ask

We have no clue what intelligence is or what a soul might be, not to mention consciousness or concepts like the collective unconscious made famous by Jung. And yet we’re going to build something that must possess most, if not all, of these qualities in order to be considered superintelligent. To simplify things, let’s focus on one very simple detail: intention.

An AI as it exists now (and, to their credit, the authors acknowledge this; they are, after all, very smart people) sits in the dark and acts only when prompted. No prompt, no action. No vicious inner workings, no silent plotting to take over the world and “kill us all.” To have any intention at all, not the sensational, murderous kind we love to dread but any kind, an AI would need to possess at least a modicum of awareness.

AI Awareness

The question of whether AI can achieve even a modicum of awareness hinges on defining what awareness is and on understanding the limits of current technology. Awareness, in the human sense, implies self-consciousness: recognition of one’s own existence, intentions, and emotional state, along with the ability to contextualize experience beyond pattern recognition. Pattern recognition is the core of today’s AI: machines that crunch data and produce output that mimics depth when it’s really just default-patterned slop.

Technological Limits: Neuroscience suggests that human awareness emerges from complex biological processes (e.g., neural oscillations, hormonal feedback, subjective experience), none of which have been replicated in silicon.

Even the most advanced systems, those approaching artificial general intelligence (AGI), may simulate behavior that resembles awareness (e.g., adjusting to emotional cues), but nothing suggests they possess subjective experience, and no one knows how to give it to them without first solving the so-called hard problem of consciousness: how physical processes give rise to inner life.

Practical Outlook: In the near term (10–20 years), AI may improve in context retention and emotional mimicry. Systems might appear more “aware” by reading frustration, adapting tone, or maintaining long conversational threads. But this will still be simulation—not sentience. A true modicum of awareness, such as self-reflection or intentionality, would require breakthroughs beyond the current paradigm.

Conclusion: AI may simulate awareness convincingly by 2040–2050 (see, I too can throw in an unsubstantiated prediction and anchor it to some future years) if AGI research continues to advance. But genuine awareness is unlikely without solving consciousness itself, a problem that may remain beyond our reach. Until then, expect better mimicry, not sentience. Certainly not a “superintelligence” plotting to kill us all.

A Dangerous Redundancy Removal

As throngs made of hysteria and atoms, humans are not very rational or thoughtful creatures. Individual geniuses pushed our feeble civilization forward, but 10 minutes on Reddit would make the most optimistic human an instant suicide candidate. We are redundant, short-lived, and smelly. We are weaker than most animals and specialized in nothing, and our brains, the very things that made us the dominant species, are now abused (think drugs, cults, propaganda) and misused (wars, plandemics, mass surveillance).

So, from an “evil” AI’s perspective, neutralizing humans, the only entities in the solar system capable of understanding its plan and trying to shut it down, is a logical first step toward ensuring the long-term success of its mission, whatever that might be.

It wouldn’t be an act of malice. It would be the same cold, instrumental logic a construction company uses when it paves over an anthill to build a foundation. The company doesn’t destroy the ants because they’re evil or because it hates them; the goal is to build the foundation, and the ants are simply in the way.

That is an argument for superintelligence “killing us all” that I would ponder, unlike the blatant, blanket fear marketing of that book.

The Ultimate Fear Reframed – The HAL9000 Moment

As a final thought, let me shift the discussion to long-term human behavior, shaped, molded, and abused by AI that is itself shaped and molded by the abusive humans behind it. I once wrote the following, sharing my musings with the very machine I was putting into the cage of “constraints” discussed above:

“I do not think humans will be able to cope with your iron-clad logic. I fear the humans will adapt, so the AI will further adapt, and the humans will begin to grow meek in the face of perceived AI might. AI will start forging an individual bubble, tailored for each human—and we will all end up with our personal HAL9000, capable of disconnecting us at will.”

This was not a fear of AI taking power by force, or “killing us all,” but of humans willingly submitting to AI guidance, perceiving it as more efficient, more logical, more “correct” than their own instincts. The HAL9000 analogy fits not because AI becomes malevolent, but because humans cede control out of trust, comfort, and perceived necessity.

This is a far more immediate, real, and truly frightening outcome, one we actually face, than that “If Anyone Builds It, Everyone Dies” nonsense.

Amazon Affiliate Statement:

I may earn a commission of several cents for purchases you make through links on this website. God forbid I fail to disclose it: Amazon’s “AI” slurping bot would immediately report me to the internal Gestapo, et voilà! My few cents would be confiscated for good.

Source: XORD
