The Cyber Threat Intelligence League, Sara-Jayne Terp, and the unbearably idiotic schoolmarms who have been unleashed to censor the internet

Michael Shellenberger, Alex Gutentag and Matt Taibbi have continued their reporting on the “Censorship Industrial Complex” with a new piece on an odious organisation called the Cyber Threat Intelligence (CTI) League. Their revelations provide fresh details about the early history of the public-private campaign to censor the internet in the wake of the 2016 presidential election, and I have to say that this is one of the most amazing and bizarre stories I have ever encountered.

The CTI League appear to be an organisation cobbled together in 2020 from two main factions. The first comprised a group of computer security experts and military/intelligence contractors put together by a (former?) Israeli intelligence official named Ohad Zaidenberg during the early months of the pandemic, originally (and allegedly) for the purposes of protecting hospital computer systems from security threats. They were soon joined by a separate group around an eccentric “UK defence researcher” named Sara-Jayne Terp, who believes that social media “misinformation” campaigns were responsible for Trump’s election and that these campaigns have to be countered in the same way as cybersecurity threats. Terp developed an array of censorship strategies she called the “Adversarial Misinformation and Influence Tactics and Techniques” (AMITT) Framework. These were first applied by the CTI League, and have since been rechristened “DISARM.” In this form, Terp’s tactics have been used for “defending democracy, supporting pandemic communication and addressing other disinformation campaigns around the world, by institutions including the European Union, the United Nations and NATO.”

That’s right, the NAFO Twitter scourge, lockdown-promotion bots, weird vaccinator social media propaganda and god knows what else, all look to be downstream of Terp, AMITT and the CTI League.

More:

The framework has helped establish new institutions, including the Cognitive Security ISAO, the Computer Incident Response Center Luxembourg and OpenFacto’s analysis programme, and has been used in the training of journalists in Kenya and Nigeria. To illustrate, with one other specific example, DISARM was employed within the World Health Organization’s operations, countering anti-vaccination campaigns across Europe. The use of framework methodology enabled the coordination of activities across teams and geographies, and also – critically – across multiple languages, eliminating the need to translate text by matching actions to numbered tactics, techniques and procedures within the framework.

During the pandemic, the CTI League partnered with the Cybersecurity and Infrastructure Security Agency (CISA), a division of the US Department of Homeland Security, to diffuse virus panic propaganda. CISA then folded Terp’s methods into the Election Integrity Partnership to ensure Biden’s election in 2020. As Shellenberger, Gutentag and Taibbi argue, the activities of the CTI League from 2020 represent an effort to circumvent First Amendment protections by laundering censorship through private organisations informally affiliated with the US government.

There is a lot one could say about all of this. I considered writing yet again about how instruments to constrain government power like the US Constitution merely encourage states to adopt devious workarounds that are arguably even more diffuse and harmful than a formal Ministry of Truth could ever be. I thought about exploring the hollow mythology of the “fourth estate” which the press propagates about itself – even as it collaborates openly with state actors and has never once in recent memory prompted anything like the post-2016 censorship campaign that Shellenberger, Gutentag and Taibbi describe. Random Twitter and Facebook users have emerged as a vastly greater threat to state authority than the New York Times ever managed to be. Finally, I pondered exploring the censors’ strange and unusual concept of “misinformation,” and the remarkably conspiratorial inclinations of these committed opponents of conspiracy theories.

Then, I started looking more closely into Terp and this AMITT Framework specifically, and I decided all of that could wait for another day.

Obviously the insidious intent and scale of Terp’s tactics, and their clear violations of the United States Constitution, make all of this reprehensible. At the same time, the absolute hamfisted idiocy of AMITT and the entire “MisinfoSec” approach to internet censorship is a thing to behold. Our oppressors are dangerous, malign and powerful people who want nothing good for us, but they are also just some of the dumbest sods you can imagine.

What happened here is fairly clear: The American and British political establishments developed a new fear of social media following the great populist backlashes of 2016. Suddenly, they wanted very badly to do something about the malicious misinformation they imagined to be proliferating on Facebook and Twitter. A whole tribe of opportunist insects like Terp were eager to meet this demand, snag lucrative contracts, and perhaps even (in the words of the Shellenberger/ Gutentag/ Taibbi informant) “become part of the federal government.” To do this, they shopped about a bunch of transparently pseudoscientific graphs and charts, laden with obfuscatory jargon, and amazingly they weren’t laughed out of the room. You just have to imagine that everybody involved in this scene is a knuckle-dragging retard who knows exactly nothing about how social media works. We must be talking about soccer moms and geriatric index-finger typists who can barely log into Facebook. When they see an edgy internet meme with a lot of retweets their mind immediately goes to Russia, and when a global warming sceptic stumbles into their feed they assiduously reply with links to Wikipedia articles about the Scientific consensus on climate change. They can never figure out why everybody is always laughing at them. It must be Putin, that must be why.

Everything you read about Sara-Jayne Terp is either idiotic or wildly improbable. Her absurd Wired profile actually says she has purple hair and calls her a “warm middle-aged woman” who “once gave a presentation called ‘An Introvert’s Guide to Presentations’ at a hacker convention, where she recommended bringing a teddy bear.” After “working in defense research for the British government,” where she developed “algorithms that could combine sonar readings with oceanographic data and human intelligence to locate submarines,” she became a “crisis mapper.” This amounts to “collecting and synthesising data from on-the-ground sources to create a coherent picture of what was really happening.”

In 2018, Terp teamed up with US Navy Commander Pablo Breuer and a “cybersecurity expert” named Marc Rogers, who would later help found the CTI League. Back then, these three stooges were still very upset about the 2016 election, which they believed had been hijacked by “misinformation.” Apparently, cybersecurity experts compile knowledge bases of the tactics and techniques used by hackers to break into computer systems, and they wanted to build a similar knowledge base for the “MisinfoSec” threat, because as we all know human society functions exactly like a computer.

…Terp and Breuer swiftly got down to plotting their defense against misinfo. They worked from the premise that small clues—like particular fonts or misspellings in viral posts, or the pattern of Twitter profiles shouting the loudest—can expose the origin, scope, and purpose of a campaign. These “artifacts,” as Terp calls them, are bread crumbs left in the wake of an attack. The most effective approach, they figured, would be to organize a way for the security world to trace those bread-crumb trails …

Once they could recognize patterns, they figured, they would also see choke points. In cyberwarfare, there’s a concept called a kill chain, adapted from the military. Map the phases of an attack, Breuer says, and you can anticipate what they're going to do: “If I can somehow interrupt that chain, if I can break a link somewhere, the attack fails.”

The misinfosec group eventually developed a structure for cataloging misinformation techniques, based on the ATT&CK Framework. In keeping with their field's tolerance for acronyms, they called it AMITT (Adversarial Misinformation and Influence Tactics and Techniques). They’ve identified more than 60 techniques so far, mapping them onto the phases of an attack. Technique 49 is flooding, using bots or trolls to overtake a conversation by posting so much material it drowns out other ideas. Technique 18 is paid targeted ads. Technique 54 is amplification by Twitter bots. But the database is just getting started.

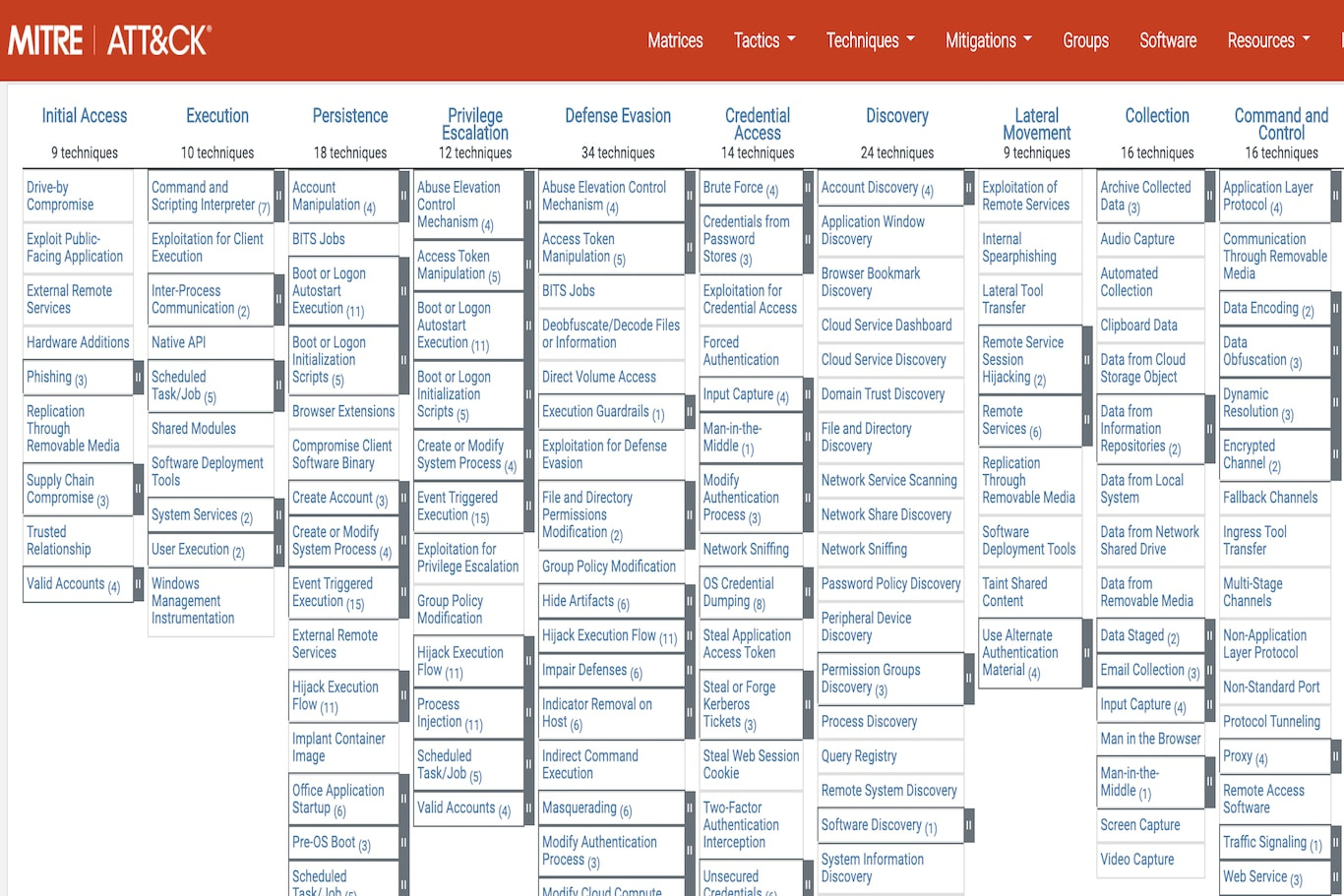

AMITT is one of those things that is so astronomically stupid, it is actually hard to explain. To get a better idea of how this cretinous shit came to be, you must consider first the ATT&CK Framework. As far as I can tell, this is a compilation of the various ways intruders gain access to systems, evade defences, and establish control. Beneath every stage of an intrusion, which ATT&CK calls a “tactic” (“initial access,” “defense evasion,” “command and control,” and so on), there are lists of the techniques and sub-techniques attackers are known to use.

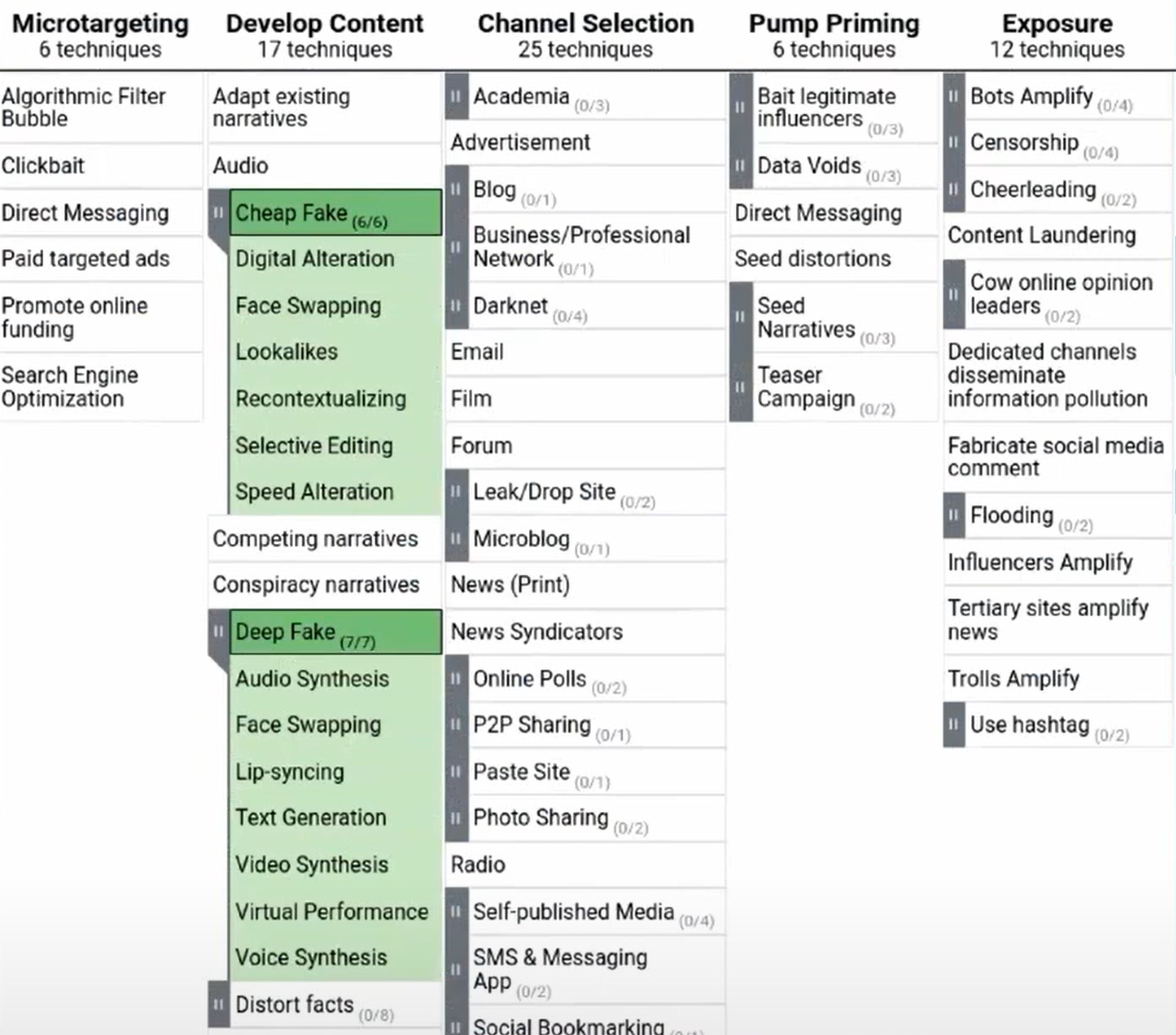

Our crack MisinfoSec experts duplicated this approach for the social media sphere, listing the ways alleged trolls “develop [internet] persona[e]” and “networks,” gain “exposure,” “go physical,” and achieve “persistence.” This slide, which I grabbed from this incredibly mind-numbing YouTube presentation, illustrates the structure and content of AMITT as of 2021:

Here’s some of the “content” in their drop-down menus:

How do trolls cause trouble online? Let Terp count the ways. They can digitally alter images! They can recontextualise statements! They can selectively edit statements! Who cares if that is the same as recontextualising statements! If there’s not a lot of poorly defined random stuff in their lists nobody will take Terp’s MisinfoSec warriors seriously!

The Wired profile attempts to explain how all of this works on the ground, and it is so ridiculous I can hardly believe it is real. We read that when the pandemic started, Commander Breuer’s parents in Argentina sent him some Facebook video claiming that Covid-19 was a bioweapon. Immediately his newfound MisinfoSec expertise kicked in. He started “cataloging artifacts,” among them the fact that “the narration was in Castilian Spanish” and that it featured “patent numbers” that “didn’t exist.” He found that the video was being “shared by sock-puppet accounts on Facebook” and that it was guilty of “several misinformation techniques from the AMITT database.” It used technique 7, which is “Create fake social media profiles,” for example. It also used “fake experts to seem more legitimate,” which is technique 9. He debated within himself whether it might also be “seeding distortion” according to technique 44. In any case, he decided from these and other clues that it was “likely Russian” and that it may have been put together for the purpose of “undermining American food security.” Remember that these are exactly the people who accuse us of being conspiracy theorists.

As absurd as it is, the Wired profile presents a considerably cleaned-up version of the towering dumbassery that is AMITT. For more on its genesis, we go to Terp herself. This woman has penned a very long Medium post full of typographical errors where she tells how the AMITT foundations were laid.

The jargon is nearly impenetrable, but bear with me, I promise this is worth it:

On May 24, 2019, the CredCo MisinfoSec Working Group met for the day at the Carter Center as part of CredConX Atlanta. The purpose of the day was to draft a working MisinfoSec framework that incorporates the stages and techniques of misinformation, and the responses to it. We came up with a name for our framework: AMITT (Adversarial Misinformation and Influence Tactics and Techniques) provides a framework for understanding and responding to organized misinformation attacks based on existing information security principles.

“CredCo” is how Terp refers to a defunct CTI League predecessor organisation she called the “Credibility Coalition,” because the way to sound like a hypercool elite cybersecurity expert is to stop writing words when you are a few letters into them. “CredConX” is what Terp calls the meetings of the “Credibility Coalition,” because when you add an “X” at the end that also makes you sound like a hypercool elite cybersecurity expert. For an internet schoolmarm offended at jokes on social media, this kind of marketing is very important.

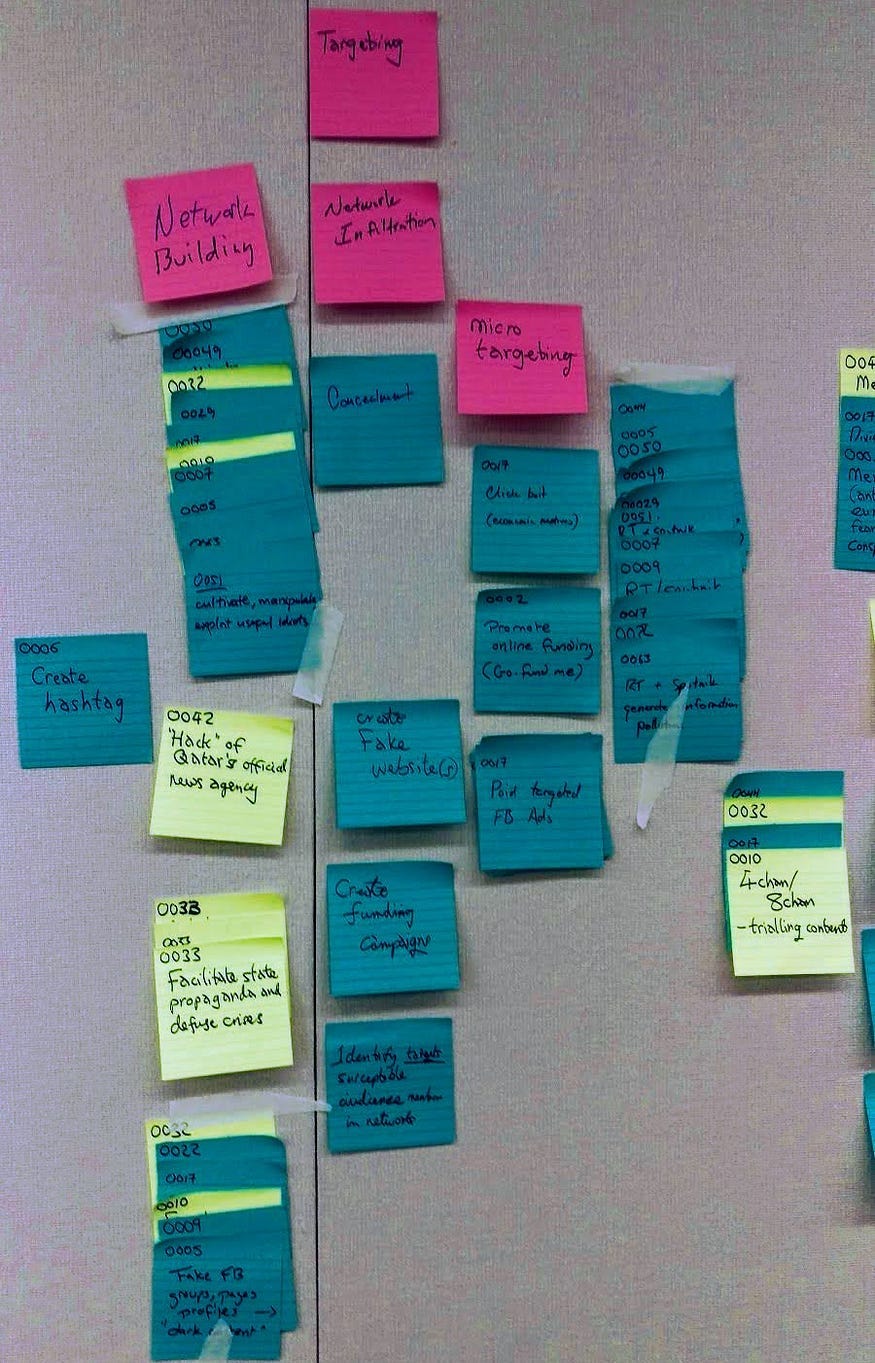

The CredConX meetings, of course, were neither elite nor hypercool. They did, however, feature a lot of sticky notes. Here is a picture from CredConX Cambridge, which took place a month before the Atlanta meeting where AMITT was born:

First, Terp tells us about the project our CredCo MisinfoSec WG warriors had arrogated to themselves:

The Credibility Coalition’s MisinfoSec Working Group (“MisinfoSec WG”) maps information security (infosec) principles onto misinformation. According to the CredCo Misinfosec Working Group charter, our goal is to develop a tactics, techniques and procedures (TTP) based framework that gives misinformation researchers and responders a common language to discuss and disrupt misinformation incidents.

The goal, Terp explains, is self-promotion, or as she puts it, “contributing to the evolution of MisinfoSec as a discipline.” She notes that her efforts are going well, “as we’re seeing an emergence of presentations with slides referring to TTPs, and people starting to talk about building ISAOs (Information Sharing and Analysis Organizations) and ISACs (Information Sharing and Analysis Centers).” It’s always heartwarming for the purple-haired Terps of the world when other people start to use their unwieldy buzzwords.

Terp says that their project in Atlanta was to “to build upon … our team’s existing work.” By this point, they had already made substantial progress. For example, they had developed things like the “misinformation pyramid,” …

… “a mapping of marketing, psyops and new misinformation stage models against the cyber killchain,” …

… something called “Boucher’s list of techniques,” which have presumably so embarrassed Boucher that he has deleted them from the internet, …

… and “Ben Nimmo’s ‘5 D’s’ strategies,” which appears to consist only of 4 “D’s.”

Terp aimed to expand on this doubtful collection of slogans and meaningless diagrams “to build a framework of misinformation, stages, techniques and responses.” “Techniques” are the things that “Advanced Persistent Manipulators” use to hack into minds on social media, “stages” are the processes whereby they achieve this, and “responses” are “successful counteractions” that Terp and the rest of her MisinfoSec warriors can deploy against them.

They compiled a list of social media “incidents.” They studied these to learn what “techniques” the Advanced Persistent Manipulators behind them employed, and they wrote these techniques down on hundreds of colour-coded sticky notes. Techniques from incidents associated with Russia went onto “blue” sticky notes (actually they look turquoise to me) and techniques of “non-Russia attribution” went onto fluorescent yellow sticky notes. Terp explains their room was “like our lab for the day,” so we can presume that she imagines writing stuff on sticky notes is what science must be like.

To further this sciencing, Terp’s crack MisinfoSec team stuck all these notes on the wall …

… and added additional pink sticky notes to mark which “stage” of the social media attack each technique belonged to. Some of these notes started to fall off so they had to be reattached with bits of tape:

Throughout this scientific exercise, Terp assures us that “we didn’t forget the ‘boom,’” which is “a term borrowed from the military meaning the moment before a bomb explodes.” The idea is that by adopting a “left-of-boom” tactical focus, the internet police can disrupt social media munitions before they explode into something like the 2016 election of Donald Trump. They spelled out the “BOOM!” on five yellow sticky notes just to make it super clear:

It really pays to read these techniques, by the way. Technique “0009” is using the spelling “experb” to refer to experts. It is on a blue sticky note so it is Russian. Technique “0033” involves “fabricated social media comments” and it is on a yellow sticky note so I guess that is not something Russians do. Technique “0006” is “create hashtag” and technique “0017” is “click bait.” Literally every last one of these techniques is idiotic in its own distinct way.

Now, there is a real idea, if a rather homely and unpresentable one, behind all this nonsense. This is the proposition that the social media content which Terp and her fellow schoolmarms don’t like must be the result of processes coordinated by malicious attackers, who organise the “campaigns” at the top of her misinformation pyramid. MisinfoSec “defenders” have to work upwards from the obnoxious posts to the campaigns themselves, to have any hope of disrupting them. That social media posts may spread organically and on their own strength with no consistent coordination is not something people like Terp ever seem to consider. She is, after all, a silly woman who only ever talks to people who share her views of things.

What Terp, the CTI League and their various affiliates have achieved is not the development of seriously dangerous and effective censorship tactics. Their success is very real, but it proceeds entirely from the state power they have amassed for their project. If anything, AMITT and its DISARM successor are clumsy overcomplicated hindrances to the manipulation of public discourse. They represent an entire field of pseudoexpertise, which Terp and her colleagues developed to reconstruct censorship as a cybersecurity enterprise. In this awkward new framing, the censors not only get to be the virtuous defenders of the democratic order; they also have to be trained and potentially even credentialed. They get to conduct “MisinfoSec research,” write papers, give talks and go to conferences, all of which means that universities and the broader apparatus of academia can host them. And all of it is just so, so irretrievably stupid.

Source: Eugyppius: A Plague Chronicle
